Imaging a Black Hole using only Closure Quantities

In this tutorial, we will create a preliminary reconstruction of the 2017 M87 data from April 6 using closure-only imaging. This tutorial is a general introduction to closure-only imaging in Comrade. For an introduction to simultaneous image and instrument modeling, see Stokes I Simultaneous Image and Instrument Modeling.

Introduction to Closure Imaging

The EHT is one of the highest-resolution telescopes ever created. Its resolution is roughly equivalent to tracking a hockey puck on the Moon when viewing it from the Earth. However, the EHT is also a unique interferometer. First, EHT data are incredibly sparse: the array is formed from only eight geographic locations around the planet. Second, the observing frequency is much higher than in traditional VLBI. Lastly, each site in the array is unique, with different dishes, receivers, feeds, and electronics. Together, these properties mean that many common imaging techniques struggle to fit EHT data and to explore the uncertainty in both the image and the instrument. One way to deal with some of these uncertainties is to fit not the data directly but closure quantities, which are independent of many of the instrumental effects that plague the data, in particular station-based gains. The types of closure quantities are briefly described in Introduction to the VLBI Imaging Problem.
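The gain cancellation behind closure quantities is easy to check numerically. The sketch below is plain Julia, not Comrade: it assumes the standard station-based corruption model, in which the visibility on baseline (i, j) becomes g_i conj(g_j) V_ij, and it uses random, hypothetical visibilities and gains for a four-station array. All function names are hypothetical helpers for illustration.

```julia
# Toy check (plain Julia, not Comrade): station gains cancel in closure quantities.
# Visibilities and gains below are random, hypothetical numbers, not EHT data.
using Random

toy_rng = MersenneTwister(42)

# "True" visibilities on the six baselines of a 4-station array, keyed by (i, j) with i < j.
Vtrue = Dict((i, j) => randn(toy_rng, ComplexF64) for i in 1:4 for j in (i + 1):4)

# Complex station gains g_i with random amplitude and phase errors.
gains = [exp(0.3 * randn(toy_rng)) * cis(2π * rand(toy_rng)) for _ in 1:4]

# Station-based corruption model: V_ij -> g_i * conj(g_j) * V_ij.
toy_corrupt(V, g) = Dict((i, j) => g[i] * conj(g[j]) * v for ((i, j), v) in V)

# Closure phase on triangle (1, 2, 3): arg(V12 * V23 * V31), using V31 = conj(V13).
toy_closure_phase(V) = angle(V[(1, 2)] * V[(2, 3)] * conj(V[(1, 3)]))

# Closure amplitude on quadrangle (1, 2, 3, 4): |V12| |V34| / (|V13| |V24|).
toy_closure_amp(V) = abs(V[(1, 2)]) * abs(V[(3, 4)]) / (abs(V[(1, 3)]) * abs(V[(2, 4)]))

Vobs = toy_corrupt(Vtrue, gains)
println("closure phase: ", toy_closure_phase(Vtrue), " vs ", toy_closure_phase(Vobs))
println("closure amplitude: ", toy_closure_amp(Vtrue), " vs ", toy_closure_amp(Vobs))
```

The individual visibility amplitudes change by |g_i||g_j|, but the gain factors cancel exactly in both closure products, which is why a closure-only fit can skip the gain model.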

In this tutorial, we will do closure-only modeling of M87 to produce a posterior of images of M87.

To get started, we will load Comrade:

using Comrade

Pyehtim loads eht-imaging using PythonCall; this is currently necessary to load uvfits files.

using Pyehtim


For reproducibility, we use a stable random number generator:

using StableRNGs

rng = StableRNG(123)

StableRNGs.LehmerRNG(state=0x000000000000000000000000000000f7)

Load the Data

To download the data, visit https://doi.org/10.25739/g85n-f134. To load the eht-imaging obsdata object, we do:

obs = ehtim.obsdata.load_uvfits(joinpath(__DIR, "..", "..", "Data", "SR1_M87_2017_096_lo_hops_netcal_StokesI.uvfits"))

Python: <ehtim.obsdata.Obsdata object at 0x7f318fedc110>

Now we do some minor preprocessing:

Scan-average the data, since the data have been preprocessed so that the gain phases are coherent.

Add 2% systematic noise to deal with calibration issues such as leakage.
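To see what the 2% term does to the error bars, here is a minimal sketch assuming ehtim adds the fractional term in quadrature, σ_new = √(σ² + (f|V|)²). The helper inflate_sigma and the example numbers are hypothetical; they are not part of ehtim or Comrade.

```julia
# Sketch of a fractional systematic error term added in quadrature.
# Assumption: sigma_new = sqrt(sigma^2 + (f * |V|)^2); this is our reading of
# ehtim's add_fractional_noise, not code taken from ehtim.
inflate_sigma(σ, V, f) = sqrt(σ^2 + (f * abs(V))^2)

# Hypothetical visibility (Jy) and thermal error (Jy).
Vex = 0.5 + 0.2im
σex = 0.005

σnew = inflate_sigma(σex, Vex, 0.02)
println("SNR before: ", abs(Vex) / σex, "  after: ", abs(Vex) / σnew)

# However bright the source, the SNR can no longer exceed 1 / f (50 for f = 0.02).
println("SNR ceiling: ", 1 / 0.02)
```

Because the added term scales with |V|, the brightest baselines are affected most, and no visibility can end up with an SNR above 1/f.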

obs = scan_average(obs).add_fractional_noise(0.02)

Python: <ehtim.obsdata.Obsdata object at 0x7f318fd51710>

Now, we extract our closure quantities from the EHT data set. We flag low-SNR points, since the closure likelihood we use is only applicable to high-SNR data.

dlcamp, dcphase = extract_table(obs, LogClosureAmplitudes(; snrcut = 3), ClosurePhases(; snrcut = 3))

(EHTObservationTable{Comrade.EHTLogClosureAmplitudeDatum{:I}}

source: M87

mjd: 57849

bandwidth: 1.856e9

sites: [:AA, :AP, :AZ, :JC, :LM, :PV, :SM]

nsamples: 128, EHTObservationTable{Comrade.EHTClosurePhaseDatum{:I}}

source: M87

mjd: 57849

bandwidth: 1.856e9

sites: [:AA, :AP, :AZ, :JC, :LM, :PV, :SM]

nsamples: 152)

Note

Fitting low-SNR closure data is complicated and requires a more sophisticated likelihood. If low-SNR data are very important, we recommend fitting visibilities with an instrumental model.
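Why the Gaussian closure likelihood needs high SNR can be seen from first-order error propagation. The sketch below uses the standard linearized formulas, in which each baseline contributes roughly 1/SNR to the error of a log closure amplitude (four baselines) or a closure phase (three baselines). It is an illustration with hypothetical helper names, not Comrade's noise model, and it does not assert how Comrade applies snrcut.

```julia
# First-order (high-SNR) error propagation for closure quantities.
# Standard linearized formulas; Comrade's exact noise treatment may differ.

# Log closure amplitude on quadrangle (1, 2, 3, 4): ln|V12| + ln|V34| - ln|V13| - ln|V24|.
toy_logcamp(V12, V34, V13, V24) = log(abs(V12)) + log(abs(V34)) - log(abs(V13)) - log(abs(V24))

# Each ln|V_k| (or baseline phase, in radians) has error ≈ 1 / SNR_k, and the
# terms add in quadrature: 4 baselines for ln A, 3 baselines for a closure phase.
toy_closure_sigma(snrs) = sqrt(sum(abs2, 1 ./ snrs))

println("σ(ln A), SNR 50 on all baselines: ", toy_closure_sigma(fill(50.0, 4)))
println("σ(ln A), SNR 3 on all baselines:  ", toy_closure_sigma(fill(3.0, 4)))
println("σ(closure phase), SNR 3: ", rad2deg(toy_closure_sigma(fill(3.0, 3))), " deg")
```

At SNR 50 on every baseline the log closure amplitude error is 0.04, but at SNR 3 it grows to 2/3, where the linearization behind a Gaussian closure likelihood is no longer accurate.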

Build the Model/Posterior

For our model, we will use an image model that consists of a raster of point sources convolved with a pulse or kernel to make a ContinuousImage. To define this model, we define the standard two-argument function sky that specifies the sky model we want to fit. The first argument is the model parameters, typically a NamedTuple. The second argument is the metadata for the model, which is typically constant. For our model, the constant metadata will just be the mean or prior image.
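The sky function below builds its raster with apply_fluctuations(CenteredLR(), mimg, σimg .* c.params). As a conceptual sketch, assuming CenteredLR follows the usual log-ratio construction (this is not VLBIImagePriors' source), the raster is the mean image multiplied by exp of the fluctuations and renormalized to unit flux; the large Gaussian then takes a fraction fg of the flux. Fixing the total flux this way costs nothing, because closure phases and closure amplitudes are unchanged when every visibility is multiplied by the same constant. The helper names are hypothetical.

```julia
# Conceptual sketch of a log-ratio image parameterization (assumed, not Comrade's source):
# the image is the mean image modulated by exp(δ) and renormalized to unit total flux.
using Statistics

function toy_logratio_image(mimg::AbstractMatrix, δ::AbstractMatrix)
    # Centering δ only helps numerically; the constant cancels after normalization.
    w = mimg .* exp.(δ .- mean(δ))
    return w ./ sum(w)
end

# Flux bookkeeping in the sky model: the raster carries (1 - fg), the Gaussian carries fg.
toy_total_flux(img, fg) = (1 - fg) * sum(img) + fg

mimg_toy = fill(1 / 16, 4, 4)          # flat 4×4 mean image with unit flux
δ = zeros(4, 4); δ[2, 2] = 1.0         # a single positive fluctuation
img = toy_logratio_image(mimg_toy, δ)
println("sum = ", sum(img), ", bright pixel / other = ", img[2, 2] / img[1, 1])
println("total flux with fg = 0.3: ", toy_total_flux(img, 0.3))
```

With zero fluctuations the sketch returns the normalized mean image, so the GMRF draws describe multiplicative departures from the prior image.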

function sky(θ, metadata)

(; fg, c, σimg) = θ

(; mimg) = metadata

# Apply the GMRF fluctuations to the image

rast = apply_fluctuations(CenteredLR(), mimg, σimg .* c.params)

m = ContinuousImage(((1 - fg)) .* rast, BSplinePulse{3}())

# Force the image centroid to be at the origin

# Add a large-scale gaussian to deal with the over-resolved mas flux

g = modify(Gaussian(), Stretch(μas2rad(250.0), μas2rad(250.0)), Renormalize(fg))

return m + g

end

sky (generic function with 1 method)

Now, let's set up our image model. The EHT's nominal resolution is 20-25 μas. Additionally, the EHT is not very sensitive to larger fields of view; typically, 60-80 μas is enough to describe the compact flux of M87. Given this, we only need a small number of pixels to describe our image.

npix = 32

fovxy = μas2rad(150.0)

7.27220521664304e-10

To define the image model, we need to specify both the grid we will be using and the FT algorithm we will use, in this case the NFFT, which is the most efficient.
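With npix and fovxy fixed, a quick arithmetic check shows how the grid compares with the array resolution. This is plain Julia with a hypothetical helper uas_to_rad that mirrors Comrade's μas2rad; the pixel centers it computes agree with the ±3.5224744018114725e-10 rad range printed for the RectiGrid in this tutorial.

```julia
# Pixel scale of the 32 × 32, 150 μas imaging grid (plain Julia arithmetic).
# 1 μas = 1e-6 arcsec = 1e-6 / 3600 deg.
uas_to_rad(x) = x * 1e-6 / 3600 * π / 180

npix = 32
fov = uas_to_rad(150.0)
dx = fov / npix              # pixel size in radians
dx_uas = 150.0 / npix        # pixel size in μas

# Pixel centers run from -fov/2 + dx/2 to fov/2 - dx/2.
xs = range(-fov / 2 + dx / 2, fov / 2 - dx / 2; length = npix)

println("pixel size: ", dx_uas, " μas")
println("pixels across a 20 μas beam: ", 20 / dx_uas, "; across 25 μas: ", 25 / dx_uas)
```

A 20-25 μas beam spans roughly 4.3 to 5.3 pixels of 4.6875 μas, so the 32-pixel grid samples the resolution well while covering 150 μas, about twice the 60-80 μas needed for the compact emission.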

grid = imagepixels(fovxy, fovxy, npix, npix)

RectiGrid(

executor: ComradeBase.Serial()

Dimensions:

(↓ X Sampled{Float64} LinRange{Float64}(-3.5224744018114725e-10, 3.5224744018114725e-10, 32) ForwardOrdered Regular Points,

→ Y Sampled{Float64} LinRange{Float64}(-3.5224744018114725e-10, 3.5224744018114725e-10, 32) ForwardOrdered Regular Points)

)

Now we need to specify our image prior. For this work we will use a Gaussian Markov random field prior.

using VLBIImagePriors, Distributions

Since we are using a Gaussian Markov random field prior, we first need to specify our mean image. For this work we will use a symmetric Gaussian with a FWHM of 50 μas.
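The fwhmfac factor converts the 50 μas FWHM into a Gaussian standard deviation, since FWHM = 2√(2 ln 2) σ ≈ 2.3548 σ, giving σ ≈ 21.2 μas. Dividing by it before Stretch suggests that Comrade's unstretched Gaussian() has unit standard deviation; the plain-Julia check below (with a hypothetical helper toy_gauss) confirms only the conversion itself.

```julia
# Converting a Gaussian FWHM to its standard deviation: FWHM = 2√(2 ln 2) σ.
fwhmfac = 2 * sqrt(2 * log(2))
σ_uas = 50.0 / fwhmfac            # σ of the 50 μas FWHM mean image, in μas

# Sanity check: a unit-peak Gaussian drops to half its peak at x = FWHM / 2.
toy_gauss(x, σ) = exp(-x^2 / (2σ^2))
println("fwhmfac = ", fwhmfac, ", σ = ", σ_uas, " μas, value at 25 μas: ", toy_gauss(25.0, σ_uas))
```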

fwhmfac = 2 * sqrt(2 * log(2))

mpr = modify(Gaussian(), Stretch(μas2rad(50.0) ./ fwhmfac))

imgpr = intensitymap(mpr, grid)

skymeta = (; mimg = imgpr ./ flux(imgpr));

Now we can finally form our image prior. For this we use a hierarchical prior where the direct log-ratio image prior is a Gaussian Markov random field. The correlation length of the GMRF is a hyperparameter that is fit during imaging. We pass the data to the prior to estimate the maximal resolution of the array and to prevent the prior from allowing correlation lengths much smaller than the telescope beam size. Note that this GMRF prior has unit variance. For more information on the GMRF prior, see the corr_image_prior docstring.
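To make the GMRF idea concrete, here is a generic sketch of a first-order Gaussian Markov random field on a pixel grid, assuming a precision matrix Q = κ²I + L, where L is the grid Laplacian and κ = 1/ρ for a correlation length ρ in pixels. This is not VLBIImagePriors' parameterization (which also normalizes the field to unit variance), and all function names are hypothetical.

```julia
# Generic first-order GMRF on an n × n pixel grid (illustration only).
# Assumed form: precision Q = κ²·I + L, with L the 4-neighbor grid Laplacian and
# κ = 1/ρ for a correlation length ρ in pixels. VLBIImagePriors' GaussMarkovRandomField
# uses its own parameterization and unit-variance normalization.
using LinearAlgebra, SparseArrays, Random

# Laplacian of a path graph with n nodes (degree 1 at the ends, 2 inside).
function path_laplacian(n)
    d = fill(2.0, n)
    d[1] = 1.0
    d[end] = 1.0
    return spdiagm(0 => d, 1 => fill(-1.0, n - 1), -1 => fill(-1.0, n - 1))
end

function toy_gmrf_precision(n, ρ)
    L1 = path_laplacian(n)
    I1 = spdiagm(0 => ones(n))
    L = kron(L1, I1) + kron(I1, L1)          # Laplacian of the 2D grid graph
    return (1 / ρ^2) * spdiagm(0 => ones(n^2)) + L
end

# One draw x ~ N(0, Q⁻¹): with Q = UᵀU (Cholesky), x = U \ z has covariance Q⁻¹.
function toy_gmrf_draw(rng, n, ρ)
    Q = toy_gmrf_precision(n, ρ)
    U = cholesky(Symmetric(Matrix(Q))).U
    return reshape(U \ randn(rng, n^2), n, n)
end

x = toy_gmrf_draw(MersenneTwister(1), 16, 4.0)   # one 16×16 draw with ρ = 4 pixels
```

A larger ρ weakens the κ² term, so the Laplacian's neighbor coupling dominates and draws vary over longer distances. The precision matrix is sparse because each pixel interacts only with its four neighbors, which is what keeps GMRF priors cheap to evaluate.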

cprior = corr_image_prior(grid, dlcamp)

HierarchicalPrior(

map:

ConditionalMarkov(

Random Field: VLBIImagePriors.GaussMarkovRandomField

Graph: MarkovRandomFieldGraph{1}(

dims: (32, 32)

)

) hyper prior:

Truncated(Distributions.InverseGamma{Float64}(

invd: Distributions.Gamma{Float64}(α=1.0, θ=0.0407685911951416)

θ: 24.528686684644875

)

; lower=1.0, upper=64.0)

)

Putting everything together, the total prior is our image prior, a prior on the standard deviation of the MRF, and a prior on the fractional flux of the Gaussian component.
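The two scalar hyperpriors can be summarized in closed form. In Distributions.jl, Exponential(θ) is parameterized by its scale, so Exponential(0.1) has mean 0.1 and tail probability P(σimg > s) = exp(-s/0.1), leaving only about 5% of the prior mass above 0.3. Because σimg multiplies a unit-variance GMRF in the sky function, this prior favors log-ratio fluctuations of order 10% about the mean image. The sketch below is plain Julia with hypothetical helper names.

```julia
# Closed-form summaries of the hyperpriors (no packages needed).
# Exponential with scale θ: mean θ and tail P(X > s) = exp(-s / θ).
toy_exp_tail(s, θ) = exp(-s / θ)

θσ = 0.1
println("prior mean of σimg: ", θσ)
println("P(σimg > 0.3): ", toy_exp_tail(0.3, θσ))

# σimg scales unit-variance GMRF draws, so δ ≈ ±0.1 is typical, i.e. the
# raster is multiplied by factors between exp(-0.1) and exp(0.1) relative to the mean image.
println("multiplicative range for δ = ±0.1: ", exp(-0.1), " to ", exp(0.1))
```

Uniform(0.0, 1.0) on fg, by contrast, treats every split of flux between the raster and the large Gaussian as equally likely a priori.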

prior = (c = cprior, σimg = Exponential(0.1), fg = Uniform(0.0, 1.0))

(c = HierarchicalPrior(

map:

ConditionalMarkov(

Random Field: VLBIImagePriors.GaussMarkovRandomField

Graph: MarkovRandomFieldGraph{1}(

dims: (32, 32)

)

) hyper prior:

Truncated(Distributions.InverseGamma{Float64}(

invd: Distributions.Gamma{Float64}(α=1.0, θ=0.0407685911951416)

θ: 24.528686684644875

)

; lower=1.0, upper=64.0)

)

, σimg = Distributions.Exponential{Float64}(θ=0.1), fg = Distributions.Uniform{Float64}(a=0.0, b=1.0))

We can then define our sky model.

skym = SkyModel(sky, prior, grid; metadata = skymeta)

SkyModel

with map: sky

on grid:

RectiGrid(

executor: ComradeBase.Serial()

Dimensions:

(↓ X Sampled{Float64} LinRange{Float64}(-3.5224744018114725e-10, 3.5224744018114725e-10, 32) ForwardOrdered Regular Points,

→ Y Sampled{Float64} LinRange{Float64}(-3.5224744018114725e-10, 3.5224744018114725e-10, 32) ForwardOrdered Regular Points)

)

)

Since we are fitting closures, we do not need to include an instrument model: the closure likelihood is approximately independent of the gains in the high-SNR limit.

using Enzyme

post = VLBIPosterior(skym, dlcamp, dcphase)

VLBIPosterior

ObservedSkyModel

with map: sky

on grid:

FourierDualDomain(

Algorithm: VLBISkyModels.NFFTAlg{Float64, AbstractNFFTs.PrecomputeFlags, UInt32}(1, 1.0e-9, AbstractNFFTs.TENSOR, 0x00000000)

Image Domain: RectiGrid(

executor: ComradeBase.Serial()

Dimensions:

(↓ X Sampled{Float64} LinRange{Float64}(-3.5224744018114725e-10, 3.5224744018114725e-10, 32) ForwardOrdered Regular Points,

→ Y Sampled{Float64} LinRange{Float64}(-3.5224744018114725e-10, 3.5224744018114725e-10, 32) ForwardOrdered Regular Points)

)

Visibility Domain: UnstructuredDomain(

executor: ComradeBase.Serial()

Dimensions:

274-element StructArray(::Vector{Float64}, ::Vector{Float64}, ::Vector{Float64}, ::Vector{Float64}) with eltype @NamedTuple{U::Float64, V::Float64, Ti::Float64, Fr::Float64}:

(U = -4.405690154666661e9, V = -4.523017159111106e9, Ti = 0.9166666567325592, Fr = 2.27070703125e11)

(U = 787577.6145833326, V = -1.6838098888888871e6, Ti = 1.2166666388511658, Fr = 2.27070703125e11)

(U = -4.444299918222218e9, V = -4.597825294222218e9, Ti = 1.2166666388511658, Fr = 2.27070703125e11)

(U = 1.337045162666665e9, V = -3.765300401777774e9, Ti = 1.2166666388511658, Fr = 2.27070703125e11)

(U = -1.336260540444443e9, V = 3.763616127999996e9, Ti = 1.2166666388511658, Fr = 2.27070703125e11)

(U = 4.445088654222218e9, V = 4.596145080888884e9, Ti = 1.2166666388511658, Fr = 2.27070703125e11)

(U = 5.781345607111105e9, V = 8.325259893333325e8, Ti = 1.2166666388511658, Fr = 2.27070703125e11)

(U = 757554.6649305547, V = -1.6707483020833314e6, Ti = 1.516666665673256, Fr = 2.27070703125e11)

(U = 1.4806382151111097e9, V = -3.741479615999996e9, Ti = 1.516666665673256, Fr = 2.27070703125e11)

(U = -4.455366328888884e9, V = -4.673060451555551e9, Ti = 1.516666665673256, Fr = 2.27070703125e11)

(U = -1.4798758791111097e9, V = 3.739809735111107e9, Ti = 1.516666665673256, Fr = 2.27070703125e11)

(U = 4.456123861333328e9, V = 4.671391715555551e9, Ti = 1.516666665673256, Fr = 2.27070703125e11)

(U = 5.936013027555549e9, V = 9.315912497777768e8, Ti = 1.516666665673256, Fr = 2.27070703125e11)

(U = 722830.7065972214, V = -1.6582321493055536e6, Ti = 1.816666603088379, Fr = 2.27070703125e11)

(U = -4.438811278222218e9, V = -4.748261176888884e9, Ti = 1.816666603088379, Fr = 2.27070703125e11)

(U = 1.615060401777776e9, V = -3.715306943999996e9, Ti = 1.816666603088379, Fr = 2.27070703125e11)

(U = -1.6143345528888872e9, V = 3.713649343999996e9, Ti = 1.816666603088379, Fr = 2.27070703125e11)

(U = 4.439536184888884e9, V = 4.746598001777773e9, Ti = 1.816666603088379, Fr = 2.27070703125e11)

(U = 6.053865329777771e9, V = 1.0329465084444433e9, Ti = 1.816666603088379, Fr = 2.27070703125e11)

(U = 683620.5937499993, V = -1.6463396631944429e6, Ti = 2.1166666746139526, Fr = 2.27070703125e11)

(U = -4.394744988444439e9, V = -4.822934485333328e9, Ti = 2.1166666746139526, Fr = 2.27070703125e11)

(U = 1.7394626631111093e9, V = -3.686947740444441e9, Ti = 2.1166666746139526, Fr = 2.27070703125e11)

(U = -1.7387777884444425e9, V = 3.6853017173333297e9, Ti = 2.1166666746139526, Fr = 2.27070703125e11)

(U = 4.395427669333329e9, V = 4.821289443555551e9, Ti = 2.1166666746139526, Fr = 2.27070703125e11)

(U = 6.134203007999993e9, V = 1.1359907128888876e9, Ti = 2.1166666746139526, Fr = 2.27070703125e11)

(U = -1.8643712995555537e9, V = 3.651452543999996e9, Ti = 2.449999988079071, Fr = 2.27070703125e11)

(U = 6.178850375111104e9, V = 1.2516714417777765e9, Ti = 2.449999988079071, Fr = 2.27070703125e11)

(U = 4.314477112888885e9, V = 4.903116785777773e9, Ti = 2.449999988079071, Fr = 2.27070703125e11)

(U = 587276.6180555549, V = -1.6236182569444429e6, Ti = 2.7500000596046448, Fr = 2.27070703125e11)

(U = 1.9658264106666646e9, V = -3.6206990791111073e9, Ti = 2.7500000596046448, Fr = 2.27070703125e11)

(U = -4.212778346666662e9, V = -4.976839239111106e9, Ti = 2.7500000596046448, Fr = 2.27070703125e11)

(U = -1.965238759111109e9, V = 3.6190756053333297e9, Ti = 2.7500000596046448, Fr = 2.27070703125e11)

(U = 6.178607487999993e9, V = 1.356139594666665e9, Ti = 2.7500000596046448, Fr = 2.27070703125e11)

(U = 4.213364039111107e9, V = 4.975216568888884e9, Ti = 2.7500000596046448, Fr = 2.27070703125e11)

(U = 535806.2881944438, V = -1.6141240694444429e6, Ti = 3.0500001311302185, Fr = 2.27070703125e11)

(U = 2.0544588266666646e9, V = -3.586710200888885e9, Ti = 3.0500001311302185, Fr = 2.27070703125e11)

(U = -4.085601863111107e9, V = -5.046995640888884e9, Ti = 3.0500001311302185, Fr = 2.27070703125e11)

(U = -2.0539194026666646e9, V = 3.5850976213333297e9, Ti = 3.0500001311302185, Fr = 2.27070703125e11)

(U = 4.0861423288888845e9, V = 5.045379299555551e9, Ti = 3.0500001311302185, Fr = 2.27070703125e11)

(U = 6.140063729777771e9, V = 1.4602817422222207e9, Ti = 3.0500001311302185, Fr = 2.27070703125e11)

(U = 481010.2230902773, V = -1.6055275520833316e6, Ti = 3.3499998450279236, Fr = 2.27070703125e11)

(U = 2.130349845333331e9, V = -3.5513328995555515e9, Ti = 3.3499998450279236, Fr = 2.27070703125e11)

(U = -3.933104519111107e9, V = -5.114784767999995e9, Ti = 3.3499998450279236, Fr = 2.27070703125e11)

(U = -2.1298682808888867e9, V = 3.5497276302222185e9, Ti = 3.3499998450279236, Fr = 2.27070703125e11)

(U = 6.063457521777771e9, V = 1.5634511359999983e9, Ti = 3.3499998450279236, Fr = 2.27070703125e11)

(U = 3.9335861404444404e9, V = 5.113178993777773e9, Ti = 3.3499998450279236, Fr = 2.27070703125e11)

(U = 416648.93055555515, V = -1.5970948472222206e6, Ti = 3.6833333373069763, Fr = 2.27070703125e11)

(U = 2.578938929777775e9, V = -4.734788238222218e9, Ti = 3.6833333373069763, Fr = 2.27070703125e11)

(U = -3.735105663999996e9, V = -5.186825713777772e9, Ti = 3.6833333373069763, Fr = 2.27070703125e11)

(U = 2.1991734328888865e9, V = -3.5106569031111073e9, Ti = 3.6833333373069763, Fr = 2.27070703125e11)

(U = -2.5785191964444413e9, V = 4.733192191999995e9, Ti = 3.6833333373069763, Fr = 2.27070703125e11)

(U = -2.198756124444442e9, V = 3.509060273777774e9, Ti = 3.6833333373069763, Fr = 2.27070703125e11)

(U = 3.797641537777774e8, V = -1.2241308906666653e9, Ti = 3.6833333373069763, Fr = 2.27070703125e11)

(U = 3.735526620444441e9, V = 5.185227192888883e9, Ti = 3.6833333373069763, Fr = 2.27070703125e11)

(U = 6.314048611555549e9, V = 4.520333351111106e8, Ti = 3.6833333373069763, Fr = 2.27070703125e11)

(U = 5.934280931555549e9, V = 1.6761668835555537e9, Ti = 3.6833333373069763, Fr = 2.27070703125e11)

(U = 364284.04464285675, V = -1.591376535714284e6, Ti = 3.98333340883255, Fr = 2.27070703125e11)

(U = -3.5324296035555515e9, V = -5.248264732444439e9, Ti = 3.98333340883255, Fr = 2.27070703125e11)

(U = 2.246733795555553e9, V = -3.4730721351111073e9, Ti = 3.98333340883255, Fr = 2.27070703125e11)

(U = 2.7040352142222195e9, V = -4.690125752888884e9, Ti = 3.98333340883255, Fr = 2.27070703125e11)

(U = -2.687901513142854e9, V = 4.694663167999995e9, Ti = 3.98333340883255, Fr = 2.27070703125e11)

(U = -2.2408562468571405e9, V = 3.476569380571425e9, Ti = 3.98333340883255, Fr = 2.27070703125e11)

(U = 4.573011991111106e8, V = -1.2170540408888876e9, Ti = 3.98333340883255, Fr = 2.27070703125e11)

(U = 3.561408036571425e9, V = 5.238641298285708e9, Ti = 3.98333340883255, Fr = 2.27070703125e11)

(U = 5.779150734222217e9, V = 1.7751998862222204e9, Ti = 3.98333340883255, Fr = 2.27070703125e11)

(U = 6.236464440888882e9, V = 5.581377528888882e8, Ti = 3.98333340883255, Fr = 2.27070703125e11)

(U = 292810.0842803027, V = -1.5850529772727257e6, Ti = 4.283333480358124, Fr = 2.27070703125e11)

(U = 2.81236712533333e9, V = -4.643490716444439e9, Ti = 4.283333480358124, Fr = 2.27070703125e11)

(U = 2.280368668444442e9, V = -3.43479938133333e9, Ti = 4.283333480358124, Fr = 2.27070703125e11)

(U = -3.307854179555552e9, V = -5.306092316444439e9, Ti = 4.283333480358124, Fr = 2.27070703125e11)

(U = -2.812399111529409e9, V = 4.641748931764702e9, Ti = 4.283333480358124, Fr = 2.27070703125e11)

(U = -2.280159088941174e9, V = 3.43308967905882e9, Ti = 4.283333480358124, Fr = 2.27070703125e11)

(U = 5.3200019644444394e8, V = -1.2086904924444432e9, Ti = 4.283333480358124, Fr = 2.27070703125e11)

(U = 3.307383679999996e9, V = 5.304687194352936e9, Ti = 4.283333480358124, Fr = 2.27070703125e11)

(U = 6.120220771555549e9, V = 6.626024835555549e8, Ti = 4.283333480358124, Fr = 2.27070703125e11)

(U = 5.588211185777772e9, V = 1.8712975182222202e9, Ti = 4.283333480358124, Fr = 2.27070703125e11)

(U = 238138.85156249977, V = -1.5812476874999984e6, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 5.092881095111105e9, V = -4.199598421333329e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 2.903268209777775e9, V = -4.595170062222218e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 2.299867178666664e9, V = -3.396077226666663e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = -3.062767004444441e9, V = -5.359950862222217e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 5.050181503999994e9, V = -4.210810559999995e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = -2.8907034879999967e9, V = 4.600886271999995e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 2.189606492444442e9, V = 3.955690115555551e8, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = -2.2976930559999976e9, V = 3.400280575999996e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 2.79301371733333e9, V = -8.035218719999992e8, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 6.034046399999994e8, V = -1.199091349333332e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 3.1008046079999967e9, V = 5.350639359999994e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 8.155639423999991e9, V = 1.1603480675555544e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 5.966032455111105e9, V = 7.64783736888888e8, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 5.36262916266666e9, V = 1.963875015111109e9, Ti = 4.583333194255829, Fr = 2.27070703125e11)

(U = 163232.87499999983, V = -1.5773965972222206e6, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 5.388079544888883e9, V = -4.101135303111107e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 2.3048084479999976e9, V = -3.3528182257777743e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = -2.768253631999997e9, V = -5.414730879999994e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 5.388055679999994e9, V = -4.101249678222218e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 2.9831417315555525e9, V = -4.539868501333328e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 5.356877311999994e9, V = -4.110922695111107e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = -2.975202047999997e9, V = 4.544589937777773e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 2.4049356302222195e9, V = 4.387324826666662e8, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 2.4049011413333306e9, V = 4.3861474933333284e8, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = -2.305032220444442e9, V = 3.356104334222219e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 3.083277411555552e9, V = -7.48315953777777e8, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 3.0832264319999967e9, V = -7.484370453333325e8, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 6.783317208888881e8, V = -1.187050453333332e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 2.8027259164444413e9, V = 5.407289457777772e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 8.156328149333324e9, V = 1.3135985066666653e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 8.156297400888881e9, V = 1.3134812622222207e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 5.751382712888883e9, V = 8.74867279999999e8, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 5.073054606222218e9, V = 2.0619144959999979e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = -5.356795278222217e9, V = 4.1110577777777734e9, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 30750.899088541635, V = 116905.95138888876, Ti = 4.916666686534882, Fr = 2.27070703125e11)

(U = 99881.10751488084, V = -1.575297547619046e6, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 5.59465437866666e9, V = -4.018611114666662e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 3.030624810666663e9, V = -4.494683207111106e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 2.2960602239999976e9, V = -3.3182473599999967e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 5.594626830222217e9, V = -4.0187286115555515e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = -2.5172720142222195e9, V = -5.45444669155555e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 5.579493814857137e9, V = -4.023605065142853e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = -3.0274068479999967e9, V = 4.496662674285709e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 2.5640353279999976e9, V = 4.7607165511111057e8, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 2.564000689777775e9, V = 4.7595243111111057e8, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = -2.2970926933333306e9, V = 3.3193651078095202e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 3.2985927679999967e9, V = -7.003643235555549e8, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 7.345665155555549e8, V = -1.1764336924444432e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 3.2985603128888855e9, V = -7.004829137777771e8, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 2.5374800944761877e9, V = 5.44992148723809e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 8.111924807111103e9, V = 1.4358354488888874e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 4.813340984888884e9, V = 2.136196949333331e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 5.547893447111105e9, V = 9.597677279999989e8, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 8.111898709333324e9, V = 1.4357162488888874e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = -5.579455049142851e9, V = 4.0237272990476146e9, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 27098.63357204858, V = 117340.62348090265, Ti = 5.183333337306976, Fr = 2.27070703125e11)

(U = 45690.53906249995, V = -1.5743099999999984e6, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 5.773825464888883e9, V = -3.933187214222218e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 2.2760630257777753e9, V = -3.283890659555552e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 3.0632664319999967e9, V = -4.448890581333328e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 5.773791857777772e9, V = -3.9333102648888845e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = -2.253957283555553e9, V = -5.490298367999994e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 5.744240127999994e9, V = -3.946954495999996e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = -3.0586252799999967e9, V = 4.455449599999995e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 2.710558015999997e9, V = 5.1570311111111057e8, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 2.7105363982222195e9, V = 5.1558591999999946e8, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = -2.2804378879999976e9, V = 3.2883723519999967e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 3.4977656675555515e9, V = -6.492943324444438e8, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 7.872002471111102e8, V = -1.164999438222221e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 3.497740145777774e9, V = -6.494127164444437e8, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 2.3015257599999976e9, V = 5.482690303999994e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 8.027784391111103e9, V = 1.5571086684444427e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 5.317234005333327e9, V = 1.0414041991111101e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 8.027758634666658e9, V = 1.556993443555554e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 4.530021788444439e9, V = 2.206406378666664e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = -5.744238079999993e9, V = 3.9470612479999957e9, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 23313.64084201386, V = 117719.41384548598, Ti = 5.449999988079071, Fr = 2.27070703125e11)

(U = 5.924709319111105e9, V = -3.8452848639999957e9, Ti = 5.716666638851166, Fr = 2.27070703125e11)

(U = 5.924688497777772e9, V = -3.845403854222218e9, Ti = 5.716666638851166, Fr = 2.27070703125e11)

(U = 3.08089890133333e9, V = -4.402725802666661e9, Ti = 5.716666638851166, Fr = 2.27070703125e11)

(U = 2.244918698666664e9, V = -3.2499217208888855e9, Ti = 5.716666638851166, Fr = 2.27070703125e11)

(U = 2.8438056746666636e9, V = 5.57437258666666e8, Ti = 5.716666638851166, Fr = 2.27070703125e11)

(U = 2.8437909475555525e9, V = 5.573208195555549e8, Ti = 5.716666638851166, Fr = 2.27070703125e11)

(U = 3.6797841706666627e9, V = -5.953657653333327e8, Ti = 5.716666638851166, Fr = 2.27070703125e11)

(U = 3.679770524444441e9, V = -5.954820355555549e8, Ti = 5.716666638851166, Fr = 2.27070703125e11)

(U = 8.35980113777777e8, V = -1.1528026026666653e9, Ti = 5.716666638851166, Fr = 2.27070703125e11)

(U = 19414.168077256923, V = 118040.4856770832, Ti = 5.716666638851166, Fr = 2.27070703125e11)

(U = -92093.64322916657, V = -1.5751088611111094e6, Ti = 6.049999952316284, Fr = 2.27070703125e11)

(U = 3.081715143111108e9, V = -4.344833365333329e9, Ti = 6.049999952316284, Fr = 2.27070703125e11)

(U = 6.07242564266666e9, V = -3.732578396444441e9, Ti = 6.049999952316284, Fr = 2.27070703125e11)

(U = 2.190544647111109e9, V = -3.208251676444441e9, Ti = 6.049999952316284, Fr = 2.27070703125e11)

(U = 6.05836305066666e9, V = -3.7438256071111073e9, Ti = 6.049999952316284, Fr = 2.27070703125e11)

(U = -3.0829770239999967e9, V = 4.34976796444444e9, Ti = 6.049999952316284, Fr = 2.27070703125e11)

(U = 2.99071138133333e9, V = 6.122509119999994e8, Ti = 6.049999952316284, Fr = 2.27070703125e11)

(U = -2.1976609848888865e9, V = 3.211308942222219e9, Ti = 6.049999952316284, Fr = 2.27070703125e11)

(U = 3.881882190222218e9, V = -5.2432489066666615e8, Ti = 6.049999952316284, Fr = 2.27070703125e11)

(U = 8.911678026666657e8, V = -1.1365769386666653e9, Ti = 6.049999952316284, Fr = 2.27070703125e11)

(U = -150875.45052083317, V = -1.5769051249999984e6, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 6.157184056888882e9, V = -3.6406887111111073e9, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 6.157173617777771e9, V = -3.6408075306666627e9, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 2.1349524657777758e9, V = -3.175750087111108e9, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 3.065380067555552e9, V = -4.298637105777774e9, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 6.14621098666666e9, V = -3.6535959466666627e9, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = -3.0691708159999967e9, V = 4.304277162666662e9, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 3.091809799111108e9, V = 6.579570684444437e8, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 3.091795150222219e9, V = 6.578363697777771e8, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = -2.1445705386666644e9, V = 3.1792032426666636e9, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 4.0222341617777734e9, V = -4.6493393599999946e8, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 4.0222294044444404e9, V = -4.650499119999995e8, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 9.304277457777768e8, V = -1.1228893119999988e9, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = -6.146197247999993e9, V = 3.653717759999996e9, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 10322.971652560753, V = 118544.06944444432, Ti = 6.316666603088379, Fr = 2.27070703125e11)

(U = 6.211784632888882e9, V = -3.5477483875555515e9, Ti = 6.583333253860474, Fr = 2.27070703125e11)

(U = 6.211780195555549e9, V = -3.547863480888885e9, Ti = 6.583333253860474, Fr = 2.27070703125e11)

(U = 2.068902503111109e9, V = -3.1441626808888855e9, Ti = 6.583333253860474, Fr = 2.27070703125e11)

(U = 3.034031331555552e9, V = -4.2528075377777734e9, Ti = 6.583333253860474, Fr = 2.27070703125e11)

(U = 3.177752227555552e9, V = 7.050634364444437e8, Ti = 6.583333253860474, Fr = 2.27070703125e11)

(U = 3.177752810666663e9, V = 7.049488924444437e8, Ti = 6.583333253860474, Fr = 2.27070703125e11)

(U = 4.1428866915555515e9, V = -4.035797448888885e8, Ti = 6.583333253860474, Fr = 2.27070703125e11)

(U = 9.651305439999989e8, V = -1.108645550222221e9, Ti = 6.583333253860474, Fr = 2.27070703125e11)

(U = 4.1428811519999957e9, V = -4.036979662222218e8, Ti = 6.583333253860474, Fr = 2.27070703125e11)

(U = 6186.6973334418335, V = 118668.12304687487, Ti = 6.583333253860474, Fr = 2.27070703125e11)

(U = -266936.3645833331, V = -1.583141166666665e6, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 6.235954645333326e9, V = -3.4543274026666627e9, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 2.987818652444441e9, V = -4.207558826666662e9, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 6.235956423111104e9, V = -3.454211199999996e9, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 1.9927185599999979e9, V = -3.1136442239999967e9, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 6.23440708266666e9, V = -3.468812543999996e9, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = -2.9972223146666636e9, V = 4.213749674666662e9, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 3.2481352177777743e9, V = 7.533482702222215e8, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 3.2481338097777743e9, V = 7.532299875555547e8, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = -2.006915669333331e9, V = 3.1172445866666636e9, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 4.243231630222218e9, V = -3.4069073333333296e8, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 4.243239466666662e9, V = -3.4056780622222185e8, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 9.951025973333323e8, V = -1.0939171839999988e9, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = -6.23440452266666e9, V = 3.4689294506666627e9, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = 2020.1427137586786, V = 118729.78884548598, Ti = 6.849999904632568, Fr = 2.27070703125e11)

(U = -343950.03624999966, V = -1.589413219999998e6, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = 6.223212003555549e9, V = -3.337163527111108e9, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = 6.223215416888882e9, V = -3.337282766222219e9, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = 2.909506687999997e9, V = -4.152156949333329e9, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = 1.8838133048888867e9, V = -3.07722722133333e9, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = 6.225276682239994e9, V = -3.340931624959996e9, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = -2.913978071039997e9, V = 4.1530716876799955e9, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = 3.313704135111108e9, V = 8.14994561777777e8, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = 3.3137085368888855e9, V = 8.148773351111102e8, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = -1.889491799039998e9, V = 3.077254901759997e9, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = 4.339403889777773e9, V = -2.5993809599999973e8, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = 4.339408696888885e9, V = -2.6005522088888863e8, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = 1.0256997831111101e9, V = -1.0749325617777767e9, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = -6.225278402559994e9, V = 3.3410462617599964e9, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = -3199.665127224389, V = 118718.70724826376, Ti = 7.183333396911621, Fr = 2.27070703125e11)

(U = -401459.5972222218, V = -1.5953378194444429e6, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = 6.178709461333326e9, V = -3.243972359111108e9, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = 2.8307861475555525e9, V = -4.1090220657777734e9, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = 6.178720483555549e9, V = -3.244096682666663e9, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = 1.786251743999998e9, V = -3.049647466666663e9, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = 6.179113016888882e9, V = -3.242380138666663e9, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = 3.347918890666663e9, V = 8.650494186666657e8, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = -2.8311915662222195e9, V = 4.107428693333329e9, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = 3.347926620444441e9, V = 8.649315555555546e8, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = -1.7866596017777758e9, V = 3.048053795555552e9, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = 4.392453788444439e9, V = -1.9432878977777755e8, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = 4.392462890666661e9, V = -1.944448759999998e8, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = 1.0445360586666656e9, V = -1.0593764586666656e9, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = -6.179119544888882e9, V = 3.2424975217777743e9, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = -7359.556450737839, V = 118639.36892361098, Ti = 7.450000047683716, Fr = 2.27070703125e11)

(U = -453840.1640624995, V = -1.6017645034722206e6, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = 2.7382116835555525e9, V = -4.0671804444444404e9, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = 6.103944163555549e9, V = -3.151683946666663e9, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = 1.6799544319999983e9, V = -3.023603527111108e9, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = 6.103956750222216e9, V = -3.1518036479999967e9, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = 6.10439825066666e9, V = -3.150082410666663e9, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = 3.3657344497777743e9, V = 9.154982524444435e8, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = -2.7386646755555525e9, V = 4.065578346666662e9, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = 3.365744974222219e9, V = 9.153749564444435e8, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = -1.6804040142222204e9, V = 3.02200078933333e9, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = 4.423988807111106e9, V = -1.2808080399999987e8, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = 4.424001663999995e9, V = -1.2819521311111099e8, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = 1.0582553617777767e9, V = -1.0435755999999989e9, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = -6.104410581333326e9, V = 3.150201891555552e9, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = -11483.574490017349, V = 118497.78168402765, Ti = 7.7166666984558105, Fr = 2.27070703125e11)

(U = -504000.3645833328, V = -1.6089620833333316e6, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = 5.999277468444439e9, V = -3.060741980444441e9, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = 2.6322108302222195e9, V = -4.0268253795555515e9, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = 5.999288348444438e9, V = -3.060856782222219e9, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = 1.565423818666665e9, V = -2.999217870222219e9, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = 5.999780252444438e9, V = -3.0591319537777743e9, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = -2.6327225742222195e9, V = 4.025219150222218e9, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = 3.3670615039999967e9, V = 9.660856764444433e8, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = 3.367077162666663e9, V = 9.659668408888879e8, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = 4.433867007999995e9, V = -6.164012344444438e7, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = -1.5659301759999983e9, V = 2.99760936533333e9, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = 4.433851406222218e9, V = -6.1523151111111045e7, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = 1.0667900266666656e9, V = -1.0276084337777767e9, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = -5.999801315555549e9, V = 3.059254300444441e9, Ti = 7.983333349227905, Fr = 2.27070703125e11)

(U = -15551.297851562484, V = 118294.64453124987, Ti = 7.983333349227905, Fr = 2.27070703125e11)

)

)

IdealInstrumentModel

Data Products: Comrade.EHTLogClosureAmplitudeDatum Comrade.EHTClosurePhaseDatum

Reconstructing the Image

To reconstruct the image, we will first find the maximum a posteriori (MAP) estimate. This approach is essentially a re-implementation of regularized maximum likelihood (RML) imaging. However, unlike traditional RML imaging, we also fit the regularizer hyperparameters, thanks to our interpretation of the imaging prior as a hierarchical model.
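To make the RML connection concrete, here is a toy sketch (not Comrade's implementation) for a 1D "image" `x` observed through a hypothetical linear forward model `A` with Gaussian noise `σn`. With a fixed prior scale σ, the negative log-posterior equals the RML objective with weight λ = 1/(2σ²) plus a constant. Treating σ as a parameter adds the prior's normalization term n·log σ, which is what lets the data constrain the regularizer strength instead of it being tuned by hand. The names `rml_objective` and `neg_log_posterior` are illustrative, and a full hierarchical model would also place a hyperprior on σ.

```julia
using LinearAlgebra

# Toy RML objective: χ²/2 plus a smoothness penalty with a hand-tuned weight λ
function rml_objective(x, y, A, σn, λ)
    χ2 = sum(abs2, (A * x .- y) ./ σn)
    return χ2 / 2 + λ * sum(abs2, diff(x))
end

# Toy hierarchical negative log-posterior: Gaussian smoothness prior with scale σ = exp(logσ).
# The length(d) * logσ term is the prior's normalization; it is what makes σ learnable.
function neg_log_posterior(x, logσ, y, A, σn)
    σ = exp(logσ)
    d = diff(x)
    χ2 = sum(abs2, (A * x .- y) ./ σn)
    return χ2 / 2 + sum(abs2, d) / (2σ^2) + length(d) * logσ
end

# Small example problem
A = Matrix{Float64}(I, 5, 5)   # identity forward model, for simplicity
x = [0.0, 1.0, 3.0, 1.0, 0.0]
y = x .+ 0.1
σn = 0.1

# For fixed σ = 0.5, the two objectives differ only by the constant length(diff(x)) * log(0.5)
gap = neg_log_posterior(x, log(0.5), y, A, σn) - rml_objective(x, y, A, σn, 1 / (2 * 0.5^2))

# For fixed x, the optimal σ² is the mean squared difference between neighbouring pixels
σ2_opt = sum(abs2, diff(x)) / length(diff(x))
println(gap, "  ", σ2_opt)
```

Minimizing over `logσ` as well as `x` is the "fit the hyperparameters" step; in plain RML, λ would stay fixed throughout.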

To optimize our posterior, Comrade provides the comrade_opt function. To use this functionality, a user first needs to import Optimization.jl and the optimizer of choice. In this tutorial, we will use Optimization.jl's LBFGSB optimizer. We also need to import Enzyme to allow for automatic differentiation.

using Optimization, OptimizationLBFGSB

xopt, sol = comrade_opt(

post, LBFGSB();

maxiters = 2000, initial_params = prior_sample(rng, post)

);┌ Warning: Using fallback BLAS replacements for (["cblas_zdotc_sub64_"]), performance may be degraded

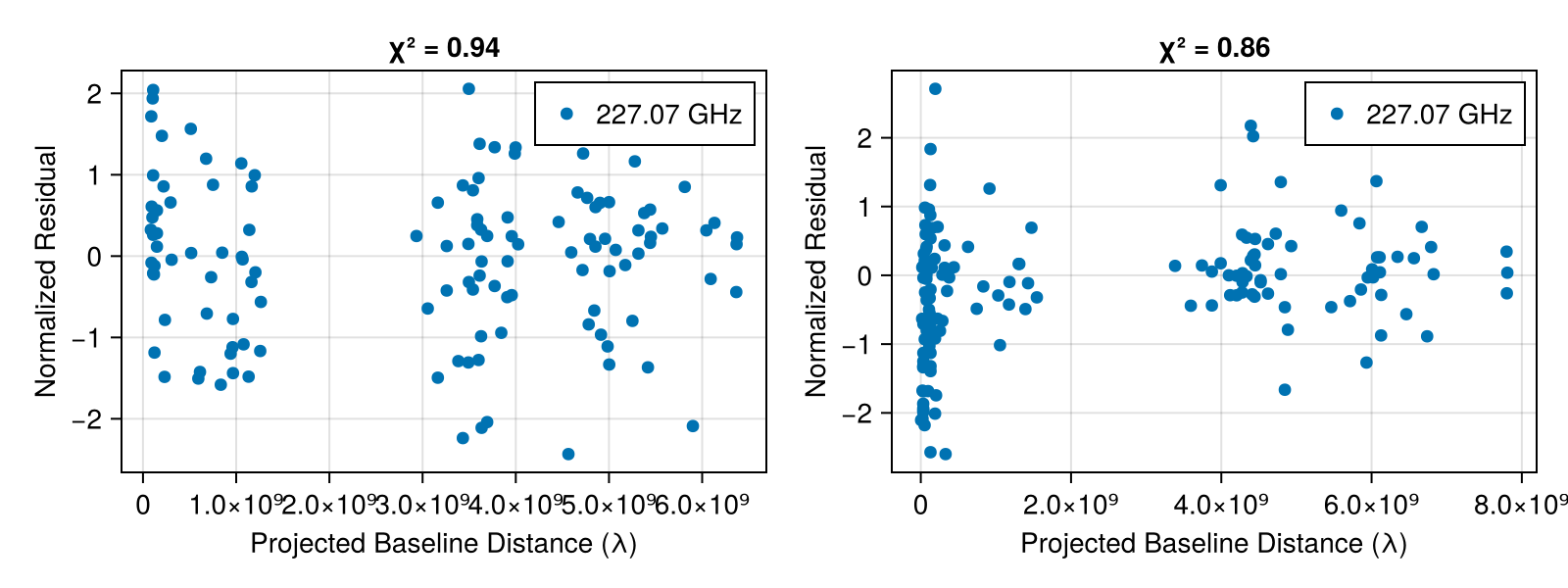

└ @ Enzyme.Compiler ~/.julia/packages/Enzyme/uGc5f/src/compiler.jl:5302First we will evaluate our fit by plotting the residuals

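using CairoMakie

using DisplayAs  # renders figures as static PNGs when the docs are built

res = residuals(post, xopt)  # normalized residuals of each data product at the MAP estimate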

fig = Figure(; size = (800, 300))

plotfields!(fig[1, 1], res[1], :uvdist, :res);

plotfields!(fig[1, 2], res[2], :uvdist, :res);

fig |> DisplayAs.PNG |> DisplayAs.Text

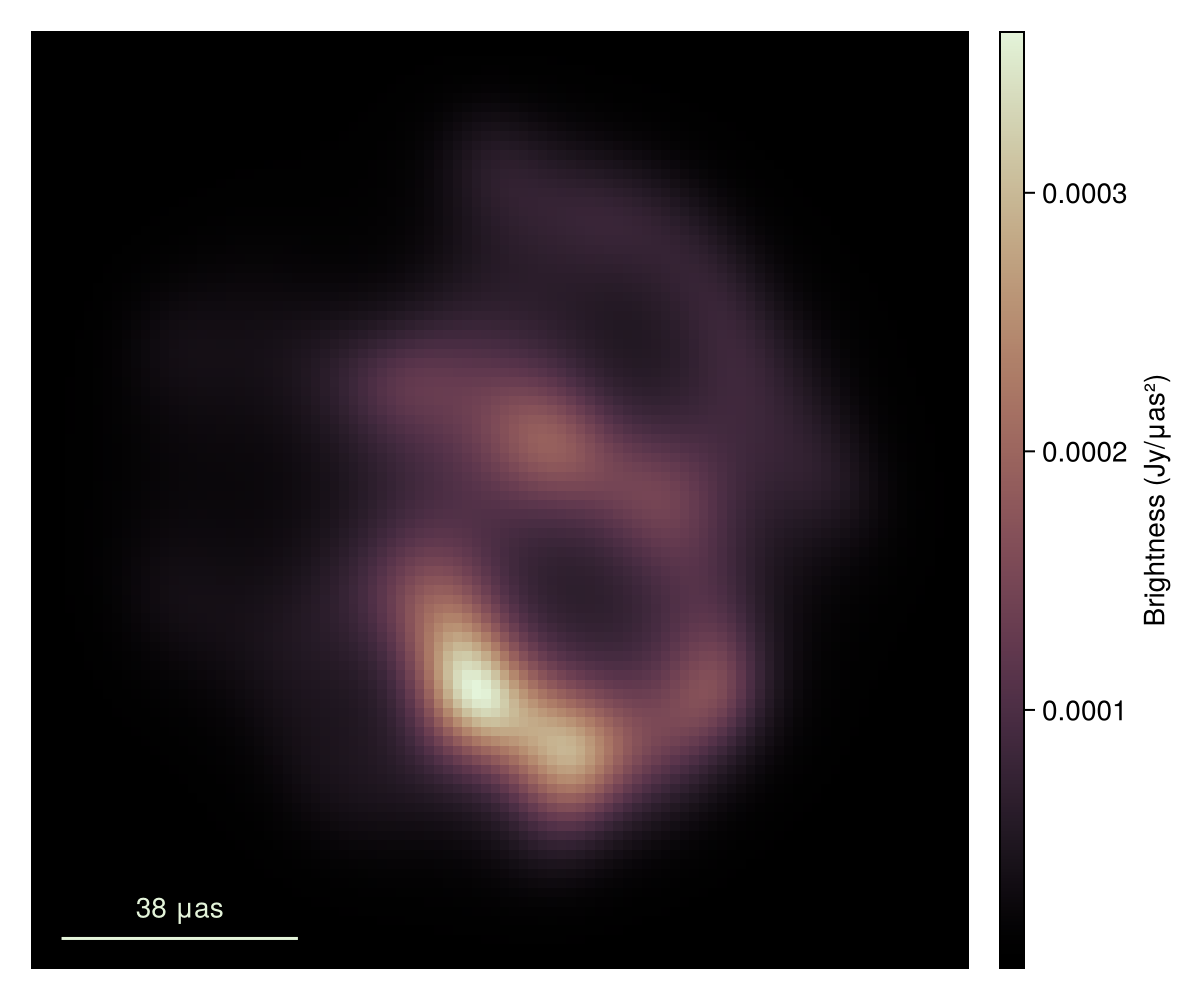
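As a reminder of what these plots show, a normalized residual is typically (data - model)/σ. For closure phases, the difference should first be wrapped onto (-π, π], so that a measured 179° modeled as -179° counts as a 2° miss rather than 358°. The sketch below assumes this definition (Comrade's `residuals` may differ in details) and also computes a reduced χ², which should be near 1 for a good fit. The helper functions and arrays are hypothetical.

```julia
using Statistics

# Normalized residuals for log closure amplitudes: plain difference over the error
lca_residuals(data, model, σ) = (data .- model) ./ σ

# Normalized residuals for closure phases (radians): wrap the difference onto (-π, π]
cp_residuals(data, model, σ) = angle.(cis.(data .- model)) ./ σ

# Reduced χ² from normalized residuals (ignoring the number of fitted parameters)
reduced_chi2(r) = mean(abs2, r)

# Example: a closure phase of 179° modeled as -179° with a 2° error bar
data_cp = [deg2rad(179.0)]
model_cp = [deg2rad(-179.0)]
σ_cp = [deg2rad(2.0)]
r = cp_residuals(data_cp, model_cp, σ_cp)
println(r, "  ", reduced_chi2(r))
```

Without the wrapping step, the same datum would report a residual of about 179σ even though the model is only 1σ away.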

Now let's plot the MAP estimate.

g = imagepixels(μas2rad(150.0), μas2rad(150.0), 100, 100)  # 150 μas field of view with 100 × 100 pixels

img = intensitymap(skymodel(post, xopt), g)  # evaluate the MAP sky model on that grid

fig = imageviz(img, size = (600, 500));

That doesn't look great. This is common for sparse EHT data: the MAP often drastically overfits the data, producing an image filled with artifacts. In addition, the MAP itself is not invariant to the model parameterization. Namely, if we changed our prior to use a fully centered parameterization, we would get a very different image. Fortunately, these issues go away when we sample from the posterior and construct posterior expectations, such as the mean image, because expectations do not depend on how the model is parameterized.
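The parameterization dependence of the MAP already shows up in one dimension. In the toy sketch below (not the tutorial's model), y ~ Normal(0, 1) and x = exp(y). The mode of y maps to x = 1, but maximizing the density of x directly, which picks up the Jacobian factor 1/x, gives x = e⁻¹ ≈ 0.368. The mean of x, in contrast, is e^(1/2) ≈ 1.649 however we parameterize; here it is estimated by Monte Carlo.

```julia
using Random, Statistics

# y ~ Normal(0, 1); x = exp(y). Unnormalized log densities:
logp_y(y) = -y^2 / 2
# Change of variables: p_x(x) = p_y(log x) / x, so add the log-Jacobian -log(x)
logp_x(x) = -log(x)^2 / 2 - log(x)

ys = range(-3, 3; length = 60_001)
xs = range(0.01, 5; length = 60_001)

map_via_y = exp(ys[argmax(logp_y.(ys))])  # mode found in y, then mapped to x
map_via_x = xs[argmax(logp_x.(xs))]       # mode found directly in x

# The mean does not depend on the parameterization: E[x] = exp(1/2)
rng = Xoshiro(1)
mean_x = mean(exp.(randn(rng, 10^6)))
println(map_via_y, "  ", map_via_x, "  ", mean_x)
```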

To sample from the posterior, we will use Hamiltonian Monte Carlo (HMC), more specifically the No-U-Turn Sampler (NUTS). For more information about NUTS, see Michael Betancourt's notes.

Note

For the metric (mass matrix), we use a diagonal matrix because it is easier to tune than a dense one.
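To see why the metric matters, here is a minimal fixed-length HMC sketch (plain HMC, not NUTS, and not AdvancedHMC's implementation) with a diagonal inverse metric `minv`. The toy target is a 2D Gaussian whose scales differ by a factor of 10, a stand-in for posteriors where some parameters are far better constrained than others. Setting `minv` to the per-parameter variances, which is roughly what diagonal-metric adaptation estimates during warm-up, lets a single step size work for both directions. The step size `ϵ`, trajectory length `L`, and chain length are arbitrary illustrative choices.

```julia
using Random, Statistics

# Toy target: independent Gaussian with scales 1 and 10 (a hypothetical stand-in posterior)
scales = [1.0, 10.0]
logp(x) = -0.5 * sum(abs2, x ./ scales)
gradlogp(x) = -x ./ scales .^ 2

# One HMC transition with diagonal inverse metric `minv` (momentum p ~ N(0, diag(1 ./ minv)))
function hmc_step(rng, x, minv, ϵ, L)
    p = randn(rng, length(x)) ./ sqrt.(minv)
    xn, pn = copy(x), copy(p)
    pn .+= (ϵ / 2) .* gradlogp(xn)           # initial half step in momentum
    for i in 1:L
        xn .+= ϵ .* minv .* pn               # position step, scaled by the inverse metric
        i < L && (pn .+= ϵ .* gradlogp(xn))  # full momentum steps in between
    end
    pn .+= (ϵ / 2) .* gradlogp(xn)           # final half step in momentum
    # Metropolis accept/reject on H = -logp(x) + pᵀ M⁻¹ p / 2
    H0 = -logp(x) + 0.5 * sum(minv .* p .^ 2)
    H1 = -logp(xn) + 0.5 * sum(minv .* pn .^ 2)
    return log(rand(rng)) < H0 - H1 ? xn : x
end

function run_chain(rng, minv; n = 5000, ϵ = 0.25, L = 6)
    x = zeros(2)
    draws = Matrix{Float64}(undef, n, 2)
    for t in 1:n
        x = hmc_step(rng, x, minv, ϵ, L)
        draws[t, :] .= x
    end
    return draws
end

rng = Xoshiro(7)
diag_draws = run_chain(rng, scales .^ 2)  # metric matched to the variances
unit_draws = run_chain(rng, ones(2))      # identity metric, same step size

lag1(v) = cor(v[1:(end - 1)], v[2:end])
# Lag-1 autocorrelation of the wide coordinate: low with the matched metric,
# close to 1 with the identity metric (the wide direction moves very slowly)
println(lag1(diag_draws[:, 2]), "  ", lag1(unit_draws[:, 2]))
```

A dense metric could also capture correlations between parameters, but it has many more entries to estimate during adaptation, which is the tuning cost the note above refers to.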

using AdvancedHMC

out = sample(rng, post, NUTS(0.8), 700; n_adapts = 500, saveto = DiskStore(), initial_params = xopt);  # 0.8 = target acceptance rate; the first 500 steps adapt the sampler

chain = load_samples(out)

PosteriorSamples

Samples size: (700,)

sampler used: AHMC

Mean

┌────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

│ sky │

│ @NamedTuple{c::@NamedTuple{params::Matrix{Float64}, hyperparams::Float64}, σimg::Float64, fg::Float64} │

├────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┤

│ (c = (params = [0.0199618 0.0422194 … -0.0396364 -0.0272439; 0.0706745 0.103604 … -0.053241 -0.0444997; … ; -0.00948032 -0.0568334 … 0.0378519 0.0017399; -0.0392743 -0.0399659 … 0.0277642 -0.0060918], hyperparams = 28.783), σimg = 1.34568, fg = 0.165069) │

└────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┘

Std. Dev.

┌──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┐

│ sky │

│ @NamedTuple{c::@NamedTuple{params::Matrix{Float64}, hyperparams::Float64}, σimg::Float64, fg::Float64} │

├──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┤

│ (c = (params = [0.568036 0.627849 … 0.599974 0.571879; 0.572201 0.653871 … 0.647181 0.578457; … ; 0.57412 0.607565 … 0.642903 0.603154; 0.528004 0.601965 … 0.580968 0.5446], hyperparams = 16.019), σimg = 0.182581, fg = 0.103547) │

└──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┘Warning

This should be run for longer!

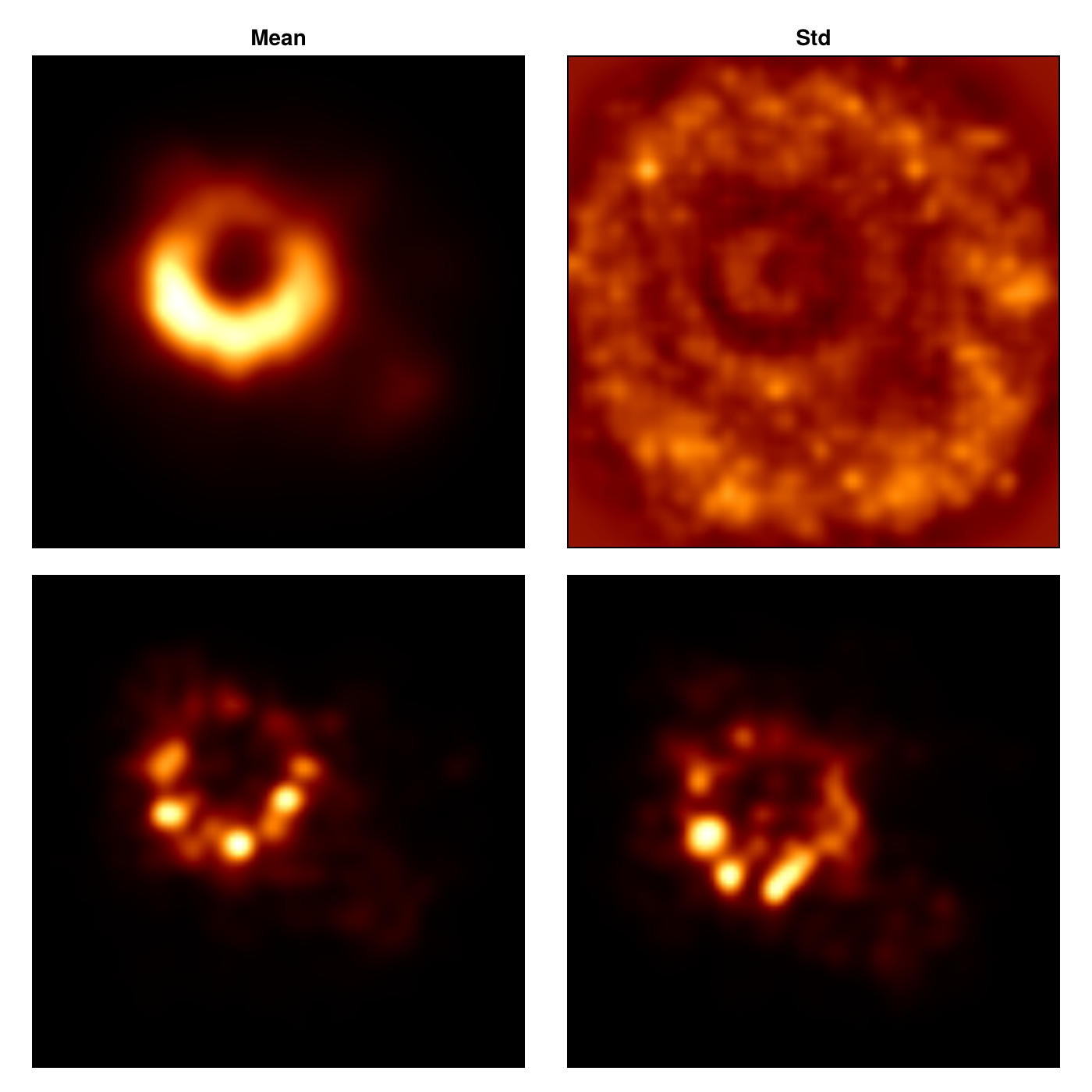
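How much longer is "longer"? A common way to judge this is the effective sample size (ESS), which discounts autocorrelated draws, together with split-R̂ computed across several independent chains. The sketch below uses Geyer's initial-positive-sequence ESS and the classic split-R̂ without rank normalization; these are simplified textbook versions, not necessarily the estimators Comrade reports, and a maintained package such as MCMCDiagnosticTools.jl is preferable in practice. The AR(1) chain and the shifted chains are synthetic test data.

```julia
using Random, Statistics

# Lag-k autocorrelation (normalized by the lag-0 sum of squares)
function autocor_lag(x, k)
    μ = mean(x)
    num = sum((x[i] - μ) * (x[i + k] - μ) for i in 1:(length(x) - k))
    return num / sum(abs2, x .- μ)
end

# ESS via Geyer's initial positive sequence: add pairs of autocorrelations while positive
function ess(x)
    n = length(x)
    τ = -1.0              # τ = -1 + 2 Σ (ρ_2m + ρ_2m+1) = 1 + 2 Σ_{t≥1} ρ_t
    k = 0
    while k + 1 < n
        Γ = autocor_lag(x, k) + autocor_lag(x, k + 1)
        Γ <= 0 && break
        τ += 2Γ
        k += 2
    end
    return n / τ
end

# Classic split-R̂ for draws[iteration, chain]: split each chain in half and compare
# between-chain and within-chain variance; values near 1 suggest the chains agree
function split_rhat(draws::AbstractMatrix)
    half = size(draws, 1) ÷ 2
    splits = hcat(draws[1:half, :], draws[(end - half + 1):end, :])
    W = mean(var(splits; dims = 1))                # mean within-chain variance
    B = half * var(vec(mean(splits; dims = 1)))    # between-chain variance
    return sqrt(((half - 1) / half * W + B / half) / W)
end

# Synthetic AR(1) chain with φ = 0.9: theoretical ESS ≈ n (1 - φ) / (1 + φ) ≈ n / 19
rng = Xoshiro(3)
φ = 0.9
ar = zeros(10_000)
for t in 2:length(ar)
    ar[t] = φ * ar[t - 1] + randn(rng)
end
println(ess(ar))

good = randn(rng, 1000, 4)           # four chains targeting the same distribution
bad = good .+ [0.0 0.0 3.0 3.0]      # two chains stuck around a different value
println(split_rhat(good), "  ", split_rhat(bad))
```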

Now that we have our posterior, we can assess which parts of the image are strongly inferred by the data. This is rather unique to Comrade; more traditional imaging algorithms, such as CLEAN and RML, are inherently unable to assess the uncertainty in their reconstructions.

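To explore our posterior, let's first create sky models from a set of posterior draws. We discard the 500 adaptation steps and keep every second remaining draw, which leaves 100 samples.

msamples = skymodel.(Ref(post), chain[501:2:end]);

The mean and standard deviation images, along with two individual draws, are then given by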

using StatsBase

imgs = intensitymap.(msamples, Ref(g))  # evaluate each posterior draw on the same pixel grid

mimg = mean(imgs)  # posterior mean image

simg = std(imgs)  # pixel-wise posterior standard deviation

fig = Figure(; size = (700, 700));

axs = [Axis(fig[i, j], xreversed = true, aspect = 1) for i in 1:2, j in 1:2]

image!(axs[1, 1], mimg, colormap = :afmhot); axs[1, 1].title = "Mean"

image!(axs[1, 2], simg ./ (max.(mimg, 1.0e-8)), colorrange = (0.0, 2.0), colormap = :afmhot); axs[1, 2].title = "Fractional Std"  # std / mean, floored to avoid dividing by zero

image!(axs[2, 1], imgs[1], colormap = :afmhot);

image!(axs[2, 2], imgs[end], colormap = :afmhot);

hidedecorations!.(axs)

fig |> DisplayAs.PNG |> DisplayAs.Text

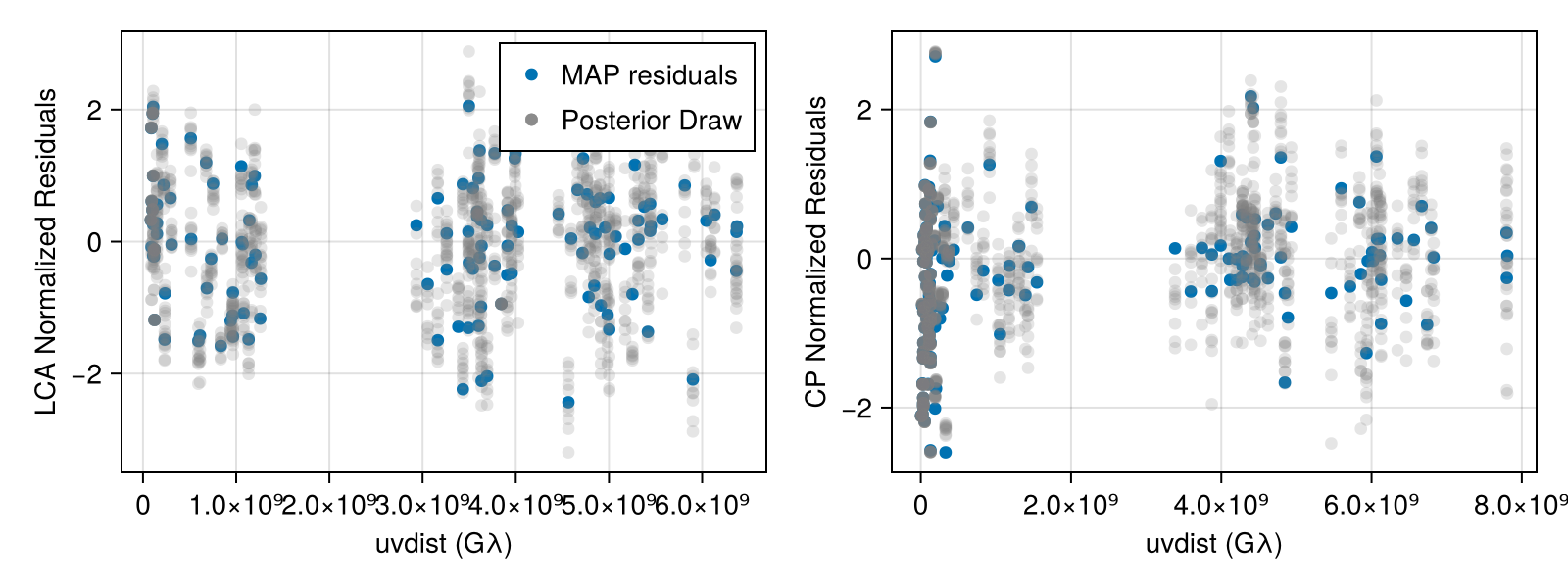
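The mean, standard deviation, and fractional-uncertainty maps above are pixel-wise statistics over the stack of posterior images. A minimal sketch with plain matrices standing in for the `IntensityMap`s (the toy images are hypothetical) shows the computation, including why the `1.0e-8` floor is there: a pixel that is empty in every draw would otherwise give 0/0 = NaN.

```julia
using Statistics

# Toy "posterior draws": three 2×2 images; pixel (1, 1) is empty in every draw
draws = [[0.0 k; 2k 3k] for k in 1.0:3.0]

stack = cat(draws...; dims = 3)                   # nx × ny × ndraws array
mimg = dropdims(mean(stack; dims = 3); dims = 3)  # pixel-wise posterior mean
simg = dropdims(std(stack; dims = 3); dims = 3)   # pixel-wise posterior std (n - 1 normalization)
frac = simg ./ max.(mimg, 1.0e-8)                 # fractional std; the floor avoids 0/0 = NaN
println(frac)
```

With `colorrange = (0.0, 2.0)` as in the plotting code, the fractional map saturates wherever the posterior standard deviation exceeds twice the mean.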

Now let's see whether the residuals of posterior draws look better than those of the MAP.

fig = Figure(; size = (800, 300))

ax1, = baselineplot(fig[1, 1], res[1], :uvdist, :res, label = "MAP residuals", axis = (ylabel = "LCA Normalized Residuals", xlabel = "uvdist (Gλ)"))

ax2, = baselineplot(fig[1, 2], res[2], :uvdist, :res, label = "MAP residuals", axis = (ylabel = "CP Normalized Residuals", xlabel = "uvdist (Gλ)"))

for s in sample(chain[501:end], 10)  # overlay residuals from 10 randomly chosen post-adaptation draws

rs = residuals(post, s)

baselineplot!(ax1, rs[1], :uvdist, :res, color = :grey, alpha = 0.2, label = "Posterior Draw")

baselineplot!(ax2, rs[2], :uvdist, :res, color = :grey, alpha = 0.2, label = "Posterior Draw")

end

axislegend(ax1, merge = true)

fig |> DisplayAs.PNG |> DisplayAs.Text

And voilà, you have a quick, preliminary image of M87 fitted using only closure products. For a publication-level version, we would recommend:

Running the chain longer, and running multiple chains, to properly assess convergence diagnostics such as the effective sample size (ESS) and R̂ (see Geometric Modeling of EHT Data).

Fitting gains. Typically, gain amplitudes for the EHT are known to within 10-20%, rather than having the infinite uncertainty that closures implicitly assume.
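The "infinite uncertainty" remark can be checked numerically. In the toy sketch below (random, hypothetical visibilities for four stations), each visibility is corrupted as g_i conj(g_j) V_ij, with station gains whose amplitudes are off by up to ±20% and whose phases are arbitrary. The closure phase on triangle (1, 2, 3) and the log closure amplitude on quadrangle (1, 2, 3, 4) come out unchanged for any choice of gains. Fitting only closures therefore behaves as if the gains were completely unknown, whereas a gain model with a 10-20% amplitude prior can still use the amplitude information.

```julia
using Random

rng = Xoshiro(42)
nst = 4

# Random "true" visibilities V[i, j] for i < j, with V[j, i] = conj(V[i, j])
V = zeros(ComplexF64, nst, nst)
for i in 1:nst, j in (i + 1):nst
    V[i, j] = randn(rng, ComplexF64)
    V[j, i] = conj(V[i, j])
end

# Station gains: amplitudes off by up to ±20%, arbitrary phases
g = (1 .+ 0.2 .* (2 .* rand(rng, nst) .- 1)) .* cis.(2π .* rand(rng, nst))
Vobs = [g[i] * conj(g[j]) * V[i, j] for i in 1:nst, j in 1:nst]

closure_phase(V, i, j, k) = angle(V[i, j] * V[j, k] * V[k, i])
log_closure_amp(V, i, j, k, l) = log(abs(V[i, j]) * abs(V[k, l]) / (abs(V[i, k]) * abs(V[j, l])))

# Individual baseline phases change, but the closures do not
println(angle(Vobs[1, 2]) - angle(V[1, 2]))
println(isapprox(cis(closure_phase(Vobs, 1, 2, 3)), cis(closure_phase(V, 1, 2, 3))))
println(isapprox(log_closure_amp(Vobs, 1, 2, 3, 4), log_closure_amp(V, 1, 2, 3, 4); atol = 1e-10))
```

Comparing `cis` of the closure phases, rather than the raw angles, avoids spurious failures when a phase sits right at the ±π wrap.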

This page was generated using Literate.jl.